Chapter 4: Evolution of a benchmark application - The Virtual Gazebo Prototype

The purpose of this research was to combine immersion and CVEs to study SHC (see Chapter 2), and in particular closely-coupled collaboration. This chapter introduces a structured task, building a gazebo, which was designed to examine distinct scenarios of closely-coupled collaboration and to serve as a benchmark for further investigations. The following sections describe how it was tested and how the findings led to a rethink and redesign. Results of user trials are discussed in Chapter 6 to keep the design process separate from the experimentation with this benchmark application. The Virtual Gazebo design, implementation and testing were performed by two members of the research team, including this author. The detailed analysis of event handling and consistency control was done by Robin Wolff [Wolff, 2006], but some of that development is presented here to give the reader an understanding of the decisions made during application development and testing. First, however, a short introduction to CVEs is given, covering their basic principles and their support for closely-coupled collaboration.

4.1 Immersive displays connected via CVEs

Many team related tasks in the real world centre around the shared manipulation of objects. A group of geographically remote users can be brought into social proximity to interactively share virtual objects within a Collaborative Virtual Environment (CVE). CVEs are extensively used to support applications as diverse as medical treatment, military training, online games, and social meeting places [Becker et al., 1998; Pausch et al., 1992; Prasolova-Førland, 2005; Roberts, 2003].

Advances in immersive display devices are ensuring their acceptance in industry as well as research [Brooks, 1999]. Natural body and head movement may be used to view an object from every angle within an immersive display. The object may be reached for and manipulated with the outstretched hand, usually while holding some input device. The feeling of presence, and particularly the naturalness of interaction with objects, may be improved when users can see their own body in the context of the virtual environment. Immersive Projection Technology (IPT) projects images onto one or more screens. CAVE-like IPT displays, such as a CAVE(TM) or ReaCTor(TM), surround the user with interactive stereo images, thus placing the user's body in a natural spatial context within the environment.

By linking CAVE-like displays through a CVE infrastructure, a user may be physically situated within a virtual scene representing a group of remote users congregated around a shared object. This allows each team member to use their body within the space to interact with other members of the team and virtual objects. The spoken word is supplemented by non-verbal communication in the form of pointing to, manipulating and interacting with the objects as well as turning to people, use of gestures and other forms of body language. This offers unprecedented naturalness of interaction and remote collaboration.

4.2 Principles of Distribution within CVEs

A key requirement of Virtual Reality (VR) is the responsiveness of the local system. Delays in representing a perspective change following a head movement are associated with disorientation and feelings of nausea [Lin et al., 2002; Meehan et al., 2003]. A CVE system supports a potentially unlimited virtual world across a number of resource-bounded computers interconnected by a network. The network, however, can induce perceivable delays in updating a distributed simulation [Roberts et al., 1996]. Key goals of a CVE are to maximise responsiveness and scalability while minimising latency. This is achieved through localisation and scaling [Roberts, 2003].

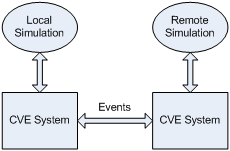

Localisation is achieved by replicating the environment, including shared information objects and avatars, on each user's machine. Sharing an experience requires that the replicas be kept consistent. This is achieved by sending changes across the network in the form of events (see Figure 4-1 for an illustration of localisation). Localisation goes further than simply replicating the state of the environment; it also includes the predictable behaviour of objects within it. Scaling limits the number and complexity of objects held on each machine and is generally driven by user interest [Greenhalgh, 1999]. The organisation and content of a scenegraph[1] are optimised for the rendering of images. Although some systems [Park et al., 2000; Wolff et al., 2004] directly link scenegraph nodes across the network, most systems introduce a second object graph, known as the replicated object model, to deal with issues of distribution. Its objects contain state information and may link to corresponding objects within the local scenegraph.
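To make the replicated object model more concrete, the following Python sketch illustrates localisation under simplifying assumptions: every site holds a full replica, a local change is applied immediately and then queued as an event, and the network is simulated by a plain function call with no latency or loss. The names `Site`, `Replica`, `SceneNode` and `deliver` are hypothetical and do not correspond to the DIVE API.

```python
from dataclasses import dataclass, field


@dataclass
class SceneNode:
    """Stand-in for a node in the local scenegraph (rendering side)."""
    name: str
    position: tuple = (0.0, 0.0, 0.0)


@dataclass
class Replica:
    """Replicated-object-model entry: shared state plus a link to a scenegraph node."""
    object_id: str
    state: dict
    node: SceneNode


@dataclass
class Site:
    """One user's machine holding a full local replica of the shared environment."""
    name: str
    replicas: dict = field(default_factory=dict)
    outbox: list = field(default_factory=list)

    def add(self, object_id, state):
        self.replicas[object_id] = Replica(object_id, dict(state), SceneNode(object_id))
        self._sync_node(object_id)

    def _sync_node(self, object_id):
        # Copy the distributed state into the rendering-side node.
        replica = self.replicas[object_id]
        replica.node.position = replica.state.get("position", replica.node.position)

    def local_change(self, object_id, attr, value):
        # Localisation: apply at once for responsiveness, then queue an event for peers.
        self.replicas[object_id].state[attr] = value
        self._sync_node(object_id)
        self.outbox.append((object_id, attr, value))

    def apply_event(self, event):
        object_id, attr, value = event
        self.replicas[object_id].state[attr] = value
        self._sync_node(object_id)


def deliver(sender, receivers):
    """Simulated network: flush the sender's queued events to every other site."""
    for event in sender.outbox:
        for receiver in receivers:
            receiver.apply_event(event)
    sender.outbox.clear()
```

Until `deliver` is called, the remote replica still shows the old position; in a real CVE this gap corresponds to the network delay discussed above.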

A virtual environment is composed of objects, which may be brought to life through their behaviour and interaction. Some objects will be static and have no behaviour. Some will have behaviour driven from the real world, for example users. Alternatively, object behaviour may be procedurally defined in a computer program. In order to make a CVE attractive and productive to use, it must support interaction that is sufficiently intuitive, reactive, responsive, detailed and consistent [Roberts, 2003]. By replicating object behaviour we reduce dependency on the network, and therefore make better use of available bandwidth and increase responsiveness [Roberts, 2003]. Early systems replicated object states but not their behaviour: each state change to any object was sent across the network to every replica of that object.
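The bandwidth argument for replicating behaviour can be illustrated with a falling object. In the hypothetical sketch below, `simulate_fall` stands in for a procedurally defined behaviour. Replicating state sends one event per frame, whereas replicating behaviour sends a single "released" event and lets each replica compute the trajectory itself. The sketch assumes that every machine runs identical, deterministic code with the same time step.

```python
# Assumed constant gravitational acceleration in m/s^2.
GRAVITY = -9.81


def simulate_fall(y0, dt, steps):
    """Deterministic behaviour: height of a dropped object, one entry per frame."""
    heights = []
    y, v = y0, 0.0
    for _ in range(steps):
        v += GRAVITY * dt          # semi-implicit Euler integration
        y += v * dt
        if y <= 0.0:               # the object comes to rest on the ground plane
            y, v = 0.0, 0.0
        heights.append(y)
    return heights


def replicate_by_state(y0, dt, steps):
    """Sender simulates and transmits every new height; receiver copies them."""
    events = simulate_fall(y0, dt, steps)   # one network event per frame
    receiver_view = list(events)
    return receiver_view, len(events)


def replicate_by_behaviour(y0, dt, steps):
    """Sender transmits a single 'released' event; receiver runs the behaviour."""
    events = [("released", y0)]             # one network event in total
    _, start_height = events[0]
    receiver_view = simulate_fall(start_height, dt, steps)
    return receiver_view, len(events)


state_view, state_msgs = replicate_by_state(2.0, 0.02, 50)
behaviour_view, behaviour_msgs = replicate_by_behaviour(2.0, 0.02, 50)
print(state_msgs, behaviour_msgs)  # 50 1
```

In practice, floating-point differences and varying frame rates can make replicas drift apart, so systems that replicate behaviour typically still send occasional state corrections.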

4.3 Object interaction via CVEs

Most teamwork tasks require communication, and many tasks, especially in design and science, require communication through and around shared objects. For example, a heavy table may require at least two people to carry it. The shared manipulation of objects requires consensus at both the human and the system level. People need to agree where to carry an object, and the CVE system needs to prevent network effects from producing confusingly divergent states of the object for each person. The latter issue was addressed for the sequential sharing of objects within a ball game [Roberts et al., 1999], where advanced ownership transfer allows the instantaneous exchange of a ball between players in competitive scenarios. In IEEE 1516 (the High Level Architecture, HLA), concurrency control is defined to allow different attributes of a given object to be affected concurrently by distinct users. Sharkey et al. describe optimisations beyond the standard that allow control of an artefact to be passed to a remote user with little or no delay [Sharkey et al., 1998]. Elsewhere, Linebarger & Kessler [2004] present a hierarchy of three concurrency control mechanisms to address the problem of "surprising" changes during closely-coupled collaboration. A virtual tennis game played between remote sites, and the importance of haptic interfaces for collaborative tasks in virtual environments, are investigated by Molet et al. and Basdogan et al. [Basdogan et al., 2000; Molet et al., 1999]. The authors state that finding a general solution to supporting various collaborative haptic tasks over a network may be "too hard". A distinction is made between concurrent and sequential interaction with shared objects, but this is not discussed further. As in [Choi et al., 1997], a spring model is used to overcome network latency and support concurrent manipulation of a shared object. Four classes of shared behaviour (autonomous behaviours, synchronised behaviours, independent interactions and shared interactions) are introduced in [Broll, 1997].

Levels of cooperation within CVEs have been categorised in similar ways by a number of research groups. Margery et al. described the different levels of cooperation as level 1 (co-existence and shared perception), level 2 (individual modification of the scene) and level 3 (simultaneous interactions with an object) [Margery et al., 1999]. A similar taxonomy for haptic collaboration describes the respective levels as static, collaborative and cooperative [Buttolo et al., 1997]. Our studies provide a more detailed taxonomy of level 3, which is described in Section 4.4.
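The difference between sequential ownership transfer, as in the ball game, and concurrent attribute-level control, as in IEEE 1516, can be sketched as follows. This is an illustrative model only, assuming one owner per attribute and an immediate, lossless network. `SharedObject`, `acquire`, `transfer` and `update` are hypothetical names; they are not the ownership-management services defined by the standard.

```python
class OwnershipError(Exception):
    """Raised when a user tries to modify or pass on an attribute it does not own."""


class SharedObject:
    """Per-attribute ownership: each attribute has at most one owner at a time."""

    def __init__(self, attrs):
        self.values = dict(attrs)
        self.owner = {name: None for name in attrs}

    def acquire(self, user, attr):
        # Grant only if the attribute is free or already held by this user.
        if self.owner[attr] in (None, user):
            self.owner[attr] = user
            return True
        return False

    def release(self, user, attr):
        if self.owner[attr] == user:
            self.owner[attr] = None

    def transfer(self, from_user, to_user, attr):
        # Direct hand-over (e.g. passing a ball) without an interval in which nobody owns it.
        if self.owner[attr] != from_user:
            raise OwnershipError(f"{from_user} does not own {attr}")
        self.owner[attr] = to_user

    def update(self, user, attr, value):
        if self.owner[attr] != user:
            raise OwnershipError(f"{user} does not own {attr}")
        self.values[attr] = value
```

Two users may therefore hold different attributes of the same object at the same time (concurrent sharing of different attributes), while a single attribute can only be passed from one user to the next (sequential sharing). Concurrent manipulation of the same attribute, such as two people carrying one table, needs an additional mechanism such as the spring model mentioned above.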

Much research has been dedicated to the development of CVE systems and toolkits. Some relevant examples are MASSIVE [Greenhalgh et al., 1995], CAVERNsoft [Johnson et al., 1998], DIVE [Frecon, 2004], NPSNET [Macedonia et al., 1994], PaRADE [Roberts et al., 1996] and VR Juggler [Bierbaum, 2001]. The DIVE system is an established testbed for experimentation with collaboration in virtual environments and, after three major revisions, remains an effective benchmark. The COVEN project [Frécon et al., 2001] undertook network trials of large-scale collaborative applications run over the DIVE [Frecon, 2004] CVE infrastructure. This produced a detailed analysis of network-induced behaviour in CVE applications [Greenhalgh et al., 2001]. DIVE was ported to CAVE-like display systems [Steed et al., 2001], and a subsequent experiment on a non-coupled interaction task with two users in different CAVE-like displays was found to be very successful [Schroeder et al., 2001]. A stretcher application implemented on top of DIVE investigated the carrying of a stretcher by allowing the material to follow the handles [Mortensen et al., 2002]. The work concludes that, although the Internet-2 offers sufficient bandwidth and low enough latency to support joint manipulation of shared objects, the CVE did not adequately address the consistency issues arising from the network characteristics.

Several studies have investigated the effect of linking various combinations of display systems on collaboration. It was found that immersed users naturally adopted dominant roles [Slater et al., 2000a]. A recent study by Schroeder et al. [2001], again using DIVE, investigated the effect of display type on the collaboration of a distributed team. This work extended the concept of a Rubik's cube by splitting the composite cube so that two people could concurrently interact with individual component cubes while observing each other's actions. The study compared three conditions based on display combinations: two linked CAVE-like displays (symmetric combination); face-to-face; and a CAVE-like display linked to a desktop (asymmetric combination). An important finding was that the asymmetry between users of the different systems affects their collaboration, and that the co-presence of one's partner increases the experience of the virtual environment (VE) as a place.

As DIVE is an established benchmark, and to aid comparison with previous studies [Frecon, 2004; Frécon et al., 2001; Greenhalgh et al., 2001; Mortensen et al., 2002; Schroeder et al., 2001; Steed et al., 2001], it was adopted for this research.

4.4 The Virtual Gazebo Benchmark

The aim of this research was to study the impact of various levels of immersion on social human communication (SHC), and in particular on closely-coupled collaboration. A benchmark was needed that would allow SHC to be studied during various scenarios of object sharing (Table 4-1) while connecting various displays over a CVE. A further requirement was that the task had to be easy enough for students and other participants in the planned user trials to understand, and quick enough to be completed within a trial (Figure 4-4). The choice was made to create a simple building task, the Virtual Gazebo.

A gazebo is a simple structure that is often found at a vantage point or within a garden (Figure 4-2). A typical working environment contains materials, tools (Figure 4-3) and users. Wooden beams may be inserted into metal feet and joined with metal joiners. Screws fix beams in place, and planks may be nailed to beams. Tools are used to drill holes, tighten screws and hammer nails. To complete the Virtual Gazebo, tools and materials must be used in various scenarios of shared object manipulation, which differ in how object attributes are shared. Scenarios include planning, passing, carrying and assembly (see Table 4-1).

These scenarios form a more detailed taxonomy of the three-level categorisation of cooperation by Margery et al. [1999]. In level 1, users can perceive and communicate with each other (see Figure 4-5a), while in level 2 they can individually modify the scene (see Figure 4-5b and Figure 4-5c). Within level 3, where users can concurrently interact with the same object, we distinguished between actions that are co-dependent (see Figure 4-5d) and those that have no direct effect on the other user's action (see Figure 4-5e). The Virtual Gazebo study extended and clarified level 3 by distinguishing between sequential and concurrent sharing of the same and of different object attributes.
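The refined level 3 can be expressed as a small classification rule over pairs of user actions. The sketch below is a hypothetical encoding for illustration and is not taken from Table 4-1. It assumes that each action targets at most one attribute of one object over a time interval, treats overlapping intervals on the same object as concurrent sharing and non-overlapping ones as sequential sharing; the `Action` type and `classify` function are invented names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    """One user's activity over the half-open time interval [start, end)."""
    user: str
    obj: Optional[str] = None    # None: the user only perceives and communicates
    attr: Optional[str] = None   # e.g. "position" or "fixed"
    start: float = 0.0
    end: float = 0.0


def overlaps(a, b):
    return a.start < b.end and b.start < a.end


def classify(a, b):
    """Place a pair of actions in Margery et al.'s levels, refining level 3."""
    if a.obj is None and b.obj is None:
        return "level 1: co-existence and shared perception"
    if a.obj is None or b.obj is None or a.obj != b.obj:
        return "level 2: individual modification of the scene"
    timing = "concurrent" if overlaps(a, b) else "sequential"
    attrs = "same attribute" if a.attr == b.attr else "different attributes"
    return f"level 3: {timing} sharing, {attrs}"
```

Under these assumptions, passing a tool would fall under sequential sharing of the same attribute, carrying a beam together under concurrent sharing of the same attribute, and one user holding a beam while the other screws it under concurrent sharing of different attributes.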

The Virtual Gazebo, as a simple building task, is easy enough for novice users to understand. However, a key requirement was closely-coupled collaboration between two or more partners. In the real world this would arise naturally from gravity and other constraints; virtual environments, in contrast, can remove these constraints, which makes such collaboration difficult to study because users would be able to finish the whole task on their own. To avoid this, and to give the benchmark application a certain feeling of reality, constraints such as gravity, building order and tool usage were implemented as an integral part of the Virtual Gazebo.
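One way in which such constraints can force closely-coupled collaboration is sketched below. The rules are assumptions made for illustration, not the Virtual Gazebo's actual implementation: each user holds at most one object at a time (one tracked input device), an unfixed beam falls when nobody supports it, and screwing a beam requires a screwdriver in hand while the beam is raised. Under these rules a single user cannot both hold a beam up and fix it.

```python
class Beam:
    """A gazebo beam subject to a simple gravity rule."""

    def __init__(self):
        self.holders = set()   # users currently supporting the beam
        self.fixed = False     # True once the beam has been screwed in place
        self.raised = False    # True while the beam is off the ground


class User:
    """Assumption: one tracked input device, so only one object held at a time."""

    def __init__(self, name):
        self.name = name
        self.holding = None


def grab(user, obj):
    if user.holding is not None:
        return False           # the user's hand is already occupied
    user.holding = obj
    if isinstance(obj, Beam):
        obj.holders.add(user.name)
        obj.raised = True
    return True


def release(user):
    obj, user.holding = user.holding, None
    if isinstance(obj, Beam):
        obj.holders.discard(user.name)
        if not obj.holders and not obj.fixed:
            obj.raised = False # gravity: an unsupported, unfixed beam falls


def screw(user, beam):
    """Fixing a beam needs a screwdriver in hand while the beam is held up."""
    if user.holding != "screwdriver" or not beam.raised:
        return False
    beam.fixed = True
    return True
```

With these rules, one user must hold the beam while the partner uses the screwdriver; once fixed, the beam stays in place after both let go.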

The implementation of the benchmark allows application details (e.g. message transfer) to be measured in order to better understand network and consistency issues. These are discussed in detail in a parallel work [Wolff, 2006]. Measurements also allow task performance to be evaluated, while questionnaires capture user perception. In addition, observations are used to gain further insight into the processes involved in closely-coupled collaboration between distributed sites.

4.5 Summary

The development of the Virtual Gazebo benchmark application has demonstrated that users sharing the manipulation of objects can adapt to the limited effects of remoteness between networked CAVE-like displays. Limiting these effects, however, required considerable effort in application development and deployment. Although many CVEs provide mechanisms for dealing with the effects of remoteness, these are barely sufficient for such linkups and require a combination of application constraints and workarounds as well as fine-tuning of event communication. CVEs have been routinely used to link desktop display systems for over a decade, but the use of immersive displays allows better communication and interaction while introducing new challenges. This concurs with earlier work by Slater et al. [2000a], which found that it is easier to collaborate with a remote user when their avatar is driven by tracking data. IPTs are different because the users are tracked, and communicating tracked human movement is data-intensive. This problem is exacerbated by the very different data requirements of shared object manipulation, where occasional vital events must be sent reliably and in order, often coinciding with bursts of non-vital movement events.
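The mixed traffic described above is commonly handled by separating vital events from movement updates. The receiver-side sketch below assumes two channels: vital events carry sequence numbers and are applied strictly in order (retransmission of lost packets is assumed to be handled elsewhere), while movement updates are timestamped and any update older than the latest one received is discarded. `Receiver`, `on_vital` and `on_movement` are hypothetical names, not part of DIVE.

```python
class Receiver:
    """Applies vital events in order and keeps only the newest movement update."""

    def __init__(self):
        self.next_seq = 0        # sequence number of the next vital event to apply
        self.pending = {}        # out-of-order vital events, keyed by sequence number
        self.applied = []        # vital events in the order they were applied
        self.latest_pose = {}    # user -> (timestamp, pose)

    def on_vital(self, seq, event):
        # Ordered channel: hold events back until every earlier one has arrived.
        self.pending[seq] = event
        while self.next_seq in self.pending:
            self.applied.append(self.pending.pop(self.next_seq))
            self.next_seq += 1

    def on_movement(self, user, timestamp, pose):
        # Unreliable channel: stale or duplicate updates are simply dropped.
        current = self.latest_pose.get(user)
        if current is None or timestamp > current[0]:
            self.latest_pose[user] = (timestamp, pose)


r = Receiver()
r.on_vital(1, "screw_fixed")        # arrives early, so it is buffered
r.on_vital(0, "beam_inserted")      # fills the gap; both are now applied
r.on_movement("alice", 2, (0.1, 1.6, 0.0))
r.on_movement("alice", 1, (0.0, 1.6, 0.0))  # older than the stored pose, ignored
print(r.applied)  # ['beam_inserted', 'screw_fixed']
```

Keeping the two channels apart means that a burst of tracking data can be thinned out without ever reordering or losing the occasional event that changes the shared object's state.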

In addition, one might have expected verbal communication between remote users to become more natural when the technology is transparent, that is, when the microphone and speakers are hidden. However, a significant increase in verbal communication was observed when users were constantly aware of a familiar communication device, namely a headset with microphone and earphones. When this was introduced, the team seemed to work together more successfully and was more engaged in the task.

[1] Object-oriented, high-level 3D graphics library
