Chapter 5: Testing the benchmark in immersive and desktop displays

This chapter describes the use of the benchmark application introduced in the previous chapter and the user trials conducted with it. Over the last few years, this research team has used the benchmark to study human interaction in CVEs, and more specifically closely-coupled collaboration. This chapter and the following ones are structured according to the development and research timeline of that period, in order to show how and why particular user trials were undertaken.

Hypothesis-1: Immersive displays (CAVEs) are suited for closely-coupled collaboration

A study by Broll in the mid-nineties concluded that concurrent shared manipulation of objects in a CVE would not be possible with the technology of the time, due to delays caused by distribution [Broll, 1995]. When this research began in late 2001, the problem had still not been addressed, although some researchers had begun to investigate the possibilities of distributed collaboration, at first using single desktop systems (e.g. [Ruddle et al., 2002]) and later through networked immersive displays (e.g. [Linebarger et al., 2003; Mortensen et al., 2002]). Therefore, in order to examine distinct scenarios of sharing the manipulation of an object, a benchmark application, the Virtual Gazebo, was developed and put on trial. The benchmark and its development are described in detail in Chapter 4.

Summary

A degree of co-presence has long been supported by CVEs; however, the realism of shared object manipulation has, in the past, been hampered by interface and network delays. We have shown that a task requiring various forms of shared object manipulation is achievable with today’s technology. This task has been undertaken successfully between remote sites on many occasions, sometimes linking up to three remote CAVE-like displays and multiple desktops. Detailed analysis has focussed on team performance and user evaluation.

Team Performance

Using the Virtual Gazebo application, novice users adapt quickly to the interface and to the remoteness of their peers. Typically, after three sessions their performance efficiency doubles, approaching that of expert users. Immersive users can undertake most parts of the task far more efficiently than their desktop counterparts. Because the Virtual Gazebo task requires collaboration at numerous points, a faster user must often wait for the slower one to catch up before beginning the next step. Schroeder et al. [2001] found that the perception of collaboration is affected by asymmetry between users of different systems. Our results show that the time taken to complete a collaborative task is also affected. When roles in the Virtual Gazebo task are ill-defined, the performance of the team approaches that of its weakest member. However, team performance increases greatly when the immersed user undertakes the more difficult part of every task.
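The waiting effect described above can be expressed as a simplified, idealised model. Suppose, purely for illustration, that the task consists of $K$ sequential collaborative steps. Assume that step $k$ cannot begin until both partners have finished step $k-1$, and let $t_{\mathrm{imm},k}$ and $t_{\mathrm{desk},k}$ be the times the immersed and the desktop user need for their respective parts of step $k$. These symbols are hypothetical and are not quantities measured in the trials. Under these assumptions the team completion time is the sum of the per-step maxima:

$$
T_{\mathrm{team}} = \sum_{k=1}^{K} \max\left(t_{\mathrm{imm},k},\; t_{\mathrm{desk},k}\right) \;\geq\; \max\left(\sum_{k=1}^{K} t_{\mathrm{imm},k},\; \sum_{k=1}^{K} t_{\mathrm{desk},k}\right)
$$

If the slower desktop user repeatedly ends up with the harder part of a step, each maximum is set by that user, and $T_{\mathrm{team}}$ approaches the desktop user's own completion time. Giving the more difficult part of each step to the immersed user lowers these maxima, which is consistent with the observation above. The model deliberately ignores communication overhead and learning effects.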

User evaluation

The user evaluation of the two distinct methods of object sharing is summarised in Table 5-1.

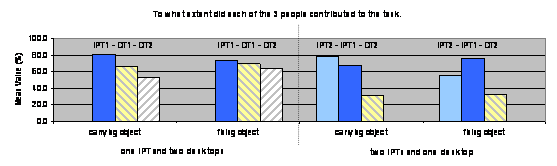

The questionnaire findings confirm that, when carrying a beam, perceived contribution is affected by the asymmetry (immersive vs. non-immersive) of the linked displays. However, this is clearly not the case when fixing a beam (Figure 5-1). This suggests that the interface plays a major role when users share the same attribute of an object, and a minor role when they share an object by manipulating distinct attributes. Surprisingly, neither the interface nor the form of object sharing is perceived to affect the degree to which the remote user hindered the task, which appears to contradict the results of the performance analysis above. From the perspective of immersed users, collaboration is considerably easier with a symmetric partner, a point examined in Chapter 6. Desktop users, however, found that the type of remote display played little part in the level of collaboration. Chapter 7 discusses in more detail the influence of displays and interfaces on the performance and perception of a single user. It demonstrates a significant difference in perception between displays of low and high immersion, which influences behaviour and interaction.

Workflow

The Virtual Gazebo benchmark is a highly collaborative application that involves all forms of closely-coupled collaboration, and its successful completion depends on maintaining a continuous workflow. It is therefore important not only to let users manipulate objects intuitively, but also to support them with different methods of communication. The evaluation results show that social communication is essential for such a task (e.g. to overcome problems with the interface and the application). They also show that every aspect of it should be supported, whether verbal communication, non-verbal communication or interaction with and around objects. In particular, verbal communication proves important for synchronising interaction and therefore for completing the task successfully. As in a related study by Hindmarsh et al. [2000], the observations and results show that desktop restrictions and remote behaviour influence people’s workflow. They make it more difficult for other users to see the relationship between the acting user and his/her object of interest. In contrast, the activity of immersed users is easier to interpret, as they interact directly with objects or other people. The question that remains, however, is how much non-verbal support, such as gestures, can and should be implemented without complicating the user interface.
